The AI Act: When Brussels Sets Global Standards (The "Brussels Effect")

Decoding the world's first comprehensive AI regulation. How can companies turn this regulatory constraint into an international competitive advantage?

Category

Artificial Intelligence, Compliance, Digital

Date

Nov 5, 2024

Governing the Algorithm: Europe as a Regulatory Pioneer

After the GDPR became a de facto global benchmark for data protection, Europe is doing it again with the AI Act. The mechanism is known as the "Brussels Effect": by imposing strict rules on a market of 450 million consumers, the EU effectively forces Silicon Valley giants to align their products worldwide. For companies, however, the compliance challenge is colossal.

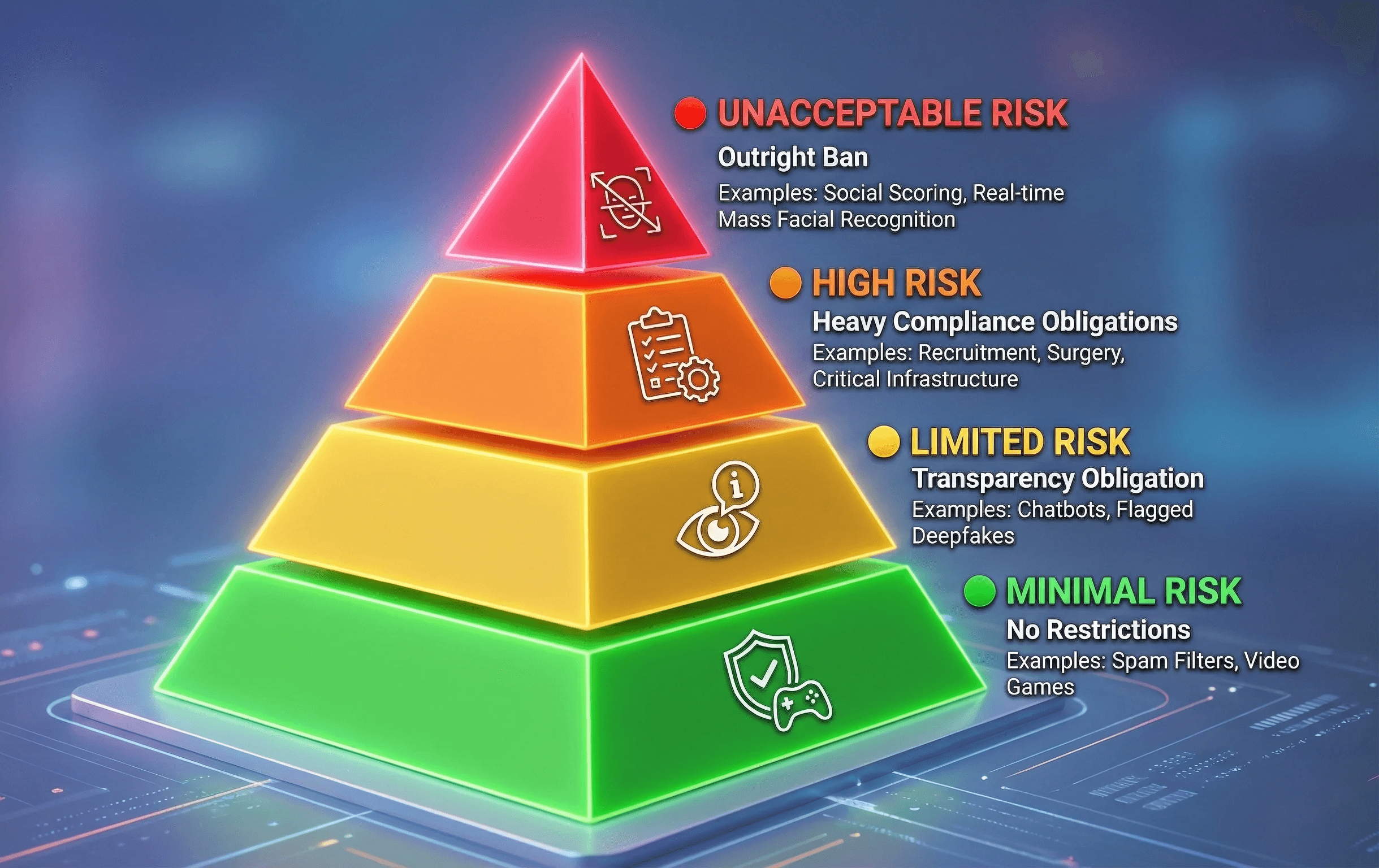

The Risk Pyramid: A Pragmatic Approach

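The text classifies AI systems into four risk levels, and getting the classification wrong is expensive: deploying a prohibited system can draw fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher.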

🔴 Unacceptable Risk: Outright ban (e.g., Social Scoring, real-time facial recognition in publicly accessible spaces, with narrow law-enforcement exceptions).

🟠 High Risk: Heavy compliance obligations (e.g., Recruitment, Surgery, Critical Infrastructure).

🟡 Limited Risk: Transparency obligation (e.g., Chatbots must disclose that users are interacting with an AI; Deepfakes must be labeled).

🟢 Minimal Risk: No restrictions (e.g., Spam filters, Video games).
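In practice, the pyramid works as a lookup from use case to obligation level, and the turnover-based fine is simple arithmetic. The Python sketch below illustrates both under stated assumptions: the use-case keys and the helper names (`classify`, `max_fine`) are hypothetical, and the fine caps reflect our reading of Article 99 of the Regulation (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, €7.5 million or 1% for supplying incorrect information, with the lower amount applying to SMEs). It is an illustration, not a substitute for legal classification.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """The four levels of the AI Act risk pyramid."""
    UNACCEPTABLE = "outright ban"
    HIGH = "heavy compliance obligations"
    LIMITED = "transparency obligation"
    MINIMAL = "no specific obligations"


# Hypothetical mapping built from the examples in this article.
# Real classification requires legal analysis of the Act and its annexes.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "cv screening for recruitment": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

# Fine caps as we read Article 99: (fixed amount in EUR, % of worldwide annual turnover).
FINE_CAPS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a known example, or None to flag it for legal review."""
    return EXAMPLE_USE_CASES.get(use_case.strip().lower())


def max_fine(violation: str, worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound of the fine: the higher of the two caps, or the lower one for SMEs."""
    fixed_cap, percent = FINE_CAPS[violation]
    turnover_cap = worldwide_turnover_eur * percent / 100
    return float(min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap))


print(classify("Social Scoring"))                       # RiskTier.UNACCEPTABLE
print(max_fine("prohibited_practice", 2_000_000_000))   # 140000000.0
```

Returning `None` for an unknown use case, rather than defaulting to the minimal tier, forces every unlisted system through human review.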

Innovation Under Constraint

Many executives see this text as a brake on innovation. At Consilium & Potentia, we see it as a framework for trust. As the European Parliament puts it, the goal is to create "Trustworthy AI."

Companies that integrate "Compliance by Design" today will gain a major competitive advantage on international markets, where legal uncertainty still hinders the widespread adoption of generative AI.

3 Immediate Actions for CIOs and Legal Departments

Map every AI system in use, including Shadow IT.

Audit suppliers, especially US-based ones, for compliance with the AI Act.

Establish an internal algorithmic ethics committee.
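The first two actions lend themselves to a simple register. Below is a minimal Python sketch, assuming a hypothetical inventory format (the `AISystem` fields and the `triage` helper are illustrative, not a standard or a regulatory template), that flags Shadow IT, systems not yet assigned a risk tier, anything classified as prohibited, and third-party systems whose supplier has not yet provided compliance evidence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystem:
    """One entry in a hypothetical internal AI inventory."""
    name: str
    supplier: str                     # "internal" for in-house systems
    sanctioned_by_it: bool            # False = discovered as Shadow IT
    risk_tier: Optional[str] = None   # "unacceptable", "high", "limited", "minimal"; None = not yet assessed
    supplier_evidence: bool = False   # hypothetical flag: supplier has documented its AI Act compliance


def triage(inventory: list[AISystem]) -> dict[str, list[str]]:
    """Group system names by the follow-up action each one needs."""
    issues: dict[str, list[str]] = {
        "shadow_it": [], "unclassified": [], "prohibited": [], "supplier_audit": [],
    }
    for system in inventory:
        if not system.sanctioned_by_it:
            issues["shadow_it"].append(system.name)
        if system.risk_tier is None:
            issues["unclassified"].append(system.name)
        elif system.risk_tier == "unacceptable":
            issues["prohibited"].append(system.name)
        if system.supplier != "internal" and not system.supplier_evidence:
            issues["supplier_audit"].append(system.name)
    return issues


inventory = [
    AISystem("CV screener", supplier="US vendor", sanctioned_by_it=True, risk_tier="high"),
    AISystem("Marketing copy tool", supplier="US vendor", sanctioned_by_it=False),
    AISystem("Spam filter", supplier="internal", sanctioned_by_it=True, risk_tier="minimal"),
]
print(triage(inventory))
```

Here the unsanctioned marketing tool surfaces in three queues at once (Shadow IT, unclassified, supplier audit), which is exactly the kind of system an ethics committee would want to review first.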